Introduction to WAN 2.6 (Preview)

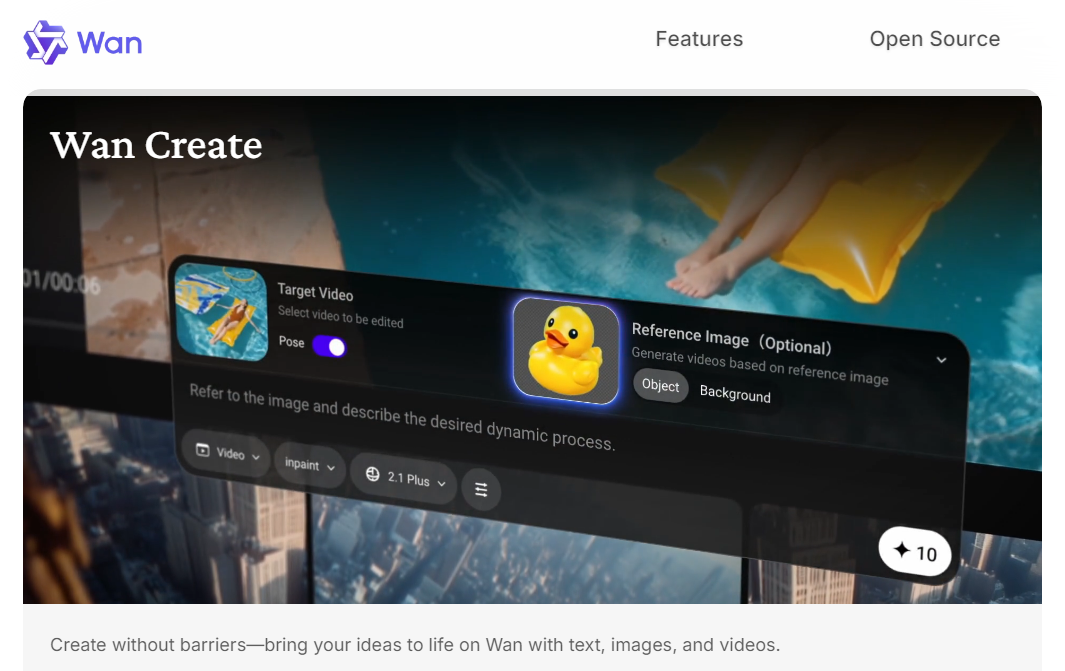

Alibaba’s WAN models have quickly become some of the most talked‑about AI video generators. WAN 2.1 gained attention for realistic visuals and strong benchmark performance, while WAN 2.5 introduced a major leap: 10‑second, 1080p, 24‑fps videos with native audio‑visual sync, driven by a new multimodal engine that accepts text, images, video, and audio as inputs.

At the time of writing, WAN 2.6 has not yet been officially released by Alibaba. However, based on the clear direction outlined in the WAN 2.5 announcement—longer clips, synchronized sound, and stronger camera control—it’s reasonable to expect WAN 2.6 to push even further toward cinematic AI video creation.

In this preview, we’ll look at what creators can expect from WAN 2.6: likely key features, how it might improve everyday workflows, and the kinds of use cases where this next‑generation text‑ and image‑to‑video model could shine.

What Creators Can Expect from WAN 2.6 (Predicted)

Note: The following features are speculative, based on WAN 2.5’s official capabilities and typical upgrade patterns in modern AI video models.

1. Longer, More Coherent AI Video Clips

WAN 2.5 already doubled video length from 5 seconds to 10 seconds while keeping 1080p, 24‑fps quality.

It’s reasonable to expect WAN 2.6 to continue this trend by:

- Extending maximum clip length beyond today’s 10‑second limit

- Improving narrative coherence across the full sequence

- Reducing temporal artifacts such as flicker or dropped frames in longer shots

For creators, this would mean more room for multi‑beat actions, mini narratives, or product sequences within a single generation.

2. Smarter Text‑to‑Video Understanding

WAN 2.5 already supports “movie‑level visual control” and professional camera language—lighting, color, composition, and shot type—directly from prompts.

WAN 2.6 text‑to‑video will likely deepen this:

- Better understanding of complex, multi‑clause prompts

- More accurate execution of specific camera moves (tracking shots, zooms, POV)

- Stronger alignment between emotional tone in the prompt and the resulting scene

This would make WAN 2.6 better suited to structured storytelling and script‑driven content, not just short visual experiments.

3. Stronger Image‑to‑Video Identity and Motion

The official WAN 2.5 notes highlight more stable dynamics and better preservation of existing features—style, faces, products, and text—when converting images into videos.

Building on that, WAN 2.6 image‑to‑video is likely to:

- Keep character identity consistent even with more dramatic motion

- Handle complex movements (turns, jumps, fast camera motion) with less distortion

- Maintain logos, UI elements, and fine details more reliably during animation

This would be particularly useful for turning product renders, character concepts, or UI mockups into smooth animated sequences with minimal cleanup.

4. More Expressive Audio and Voice‑Driven Video

WAN 2.5 is the first in the series to offer audio‑visual synchronized video generation, producing human voices, sound effects, and background music that match on‑screen content and lip movements.

WAN 2.6 is therefore likely to:

- Refine lip‑sync for more natural speech and emotional nuance

- Add richer ambient soundscapes and more varied SFX libraries

- Improve “audio‑driven” workflows, where a voice track can drive both motion and expression in the video

For creators who rely on AI video with audio, that could mean closer‑to‑final clips straight from the model, with less need for separate voiceover or music production.

5. Tighter Multimodal Control and Editing Workflows

WAN 2.5’s native multimodal architecture already supports text, image, video, and audio as both inputs and outputs.

The next step for WAN 2.6 might include:

- More flexible combinations of text + image + audio prompts

- Basic edit‑style capabilities—such as extending an existing shot, adding new motion, or adjusting style based on a reference

- Easier reuse of characters or assets across multiple shots for simple multi‑shot sequences

If these predictions hold, WAN 2.6 could feel less like a “single shot generator” and more like a compact AI video creation system for short, coherent sequences.

Use Cases for WAN 2.6 AI Video

Again, these use cases are speculative, but they follow naturally from WAN’s current direction and from what the WAN 2.5 release has already made possible.

1. Short Social‑First Video with Native Audio

With longer clips and better audio‑visual sync, WAN 2.6 will likely be ideal for:

- TikTok / Reels / Shorts content with dialogue or voiceover

- Product teasers with integrated sound design

- Reaction or commentary‑style clips driven by a voice track

Creators could describe the scene and tone, attach a short audio sample, and let the WAN 2.6 AI video generator handle both visuals and sound in one pass.

2. Voice‑Driven Performance and Talking‑Head Content

WAN 2.5 already supports “voice‑to‑video,” where a single image plus audio can produce a performance video with detailed facial and body motion.

WAN 2.6 is likely to extend this for:

- Talking‑head explainers and educational clips

- Virtual host or presenter videos

- Character performances (cartoon or realistic) synced to recorded dialogue

This would give educators, influencers, and brands a fast way to produce on‑camera style content without setting up a physical shoot.

3. Concept, Product, and Scene Animation

With more stable image‑to‑video and longer durations, WAN 2.6 will probably be strong for:

- Turning product images into simple demo videos

- Bringing environment or key‑art concepts to life with camera motion

- Creating short “hero shots” for landing pages, ad creatives, and campaigns

These AI video workflows could help replace or augment traditional 3D renders or live‑action shoots in simpler scenarios.

4. Previz and Story Exploration

If WAN 2.6 continues to improve camera control and narrative coherence, it could be useful for:

- Visualizing script ideas or storyboards

- Testing different visual directions before full production

- Quickly prototyping ad sequences or short narrative arcs

For creators and teams, this could turn WAN 2.6 into a low‑friction sandbox for experimenting with pacing, framing, and mood.

Conclusion

While WAN 2.6 has not yet been officially released, it is currently expected to launch in December, continuing Alibaba’s push toward more powerful, multimodal AI video generation and smarter storytelling. For creators, that likely means longer, more coherent clips, better audio‑visual sync, and a more capable AI video model for real production workflows.

Akool will integrate WAN 2.6 into its AI video suite as soon as it becomes available, so you can experiment with its new capabilities at the very first opportunity.

Stay tuned, and look forward to creating your next wave of smart, cinematic content with WAN 2.6 on Akool.