Introduction: What’s Next for Seedance 1.5?

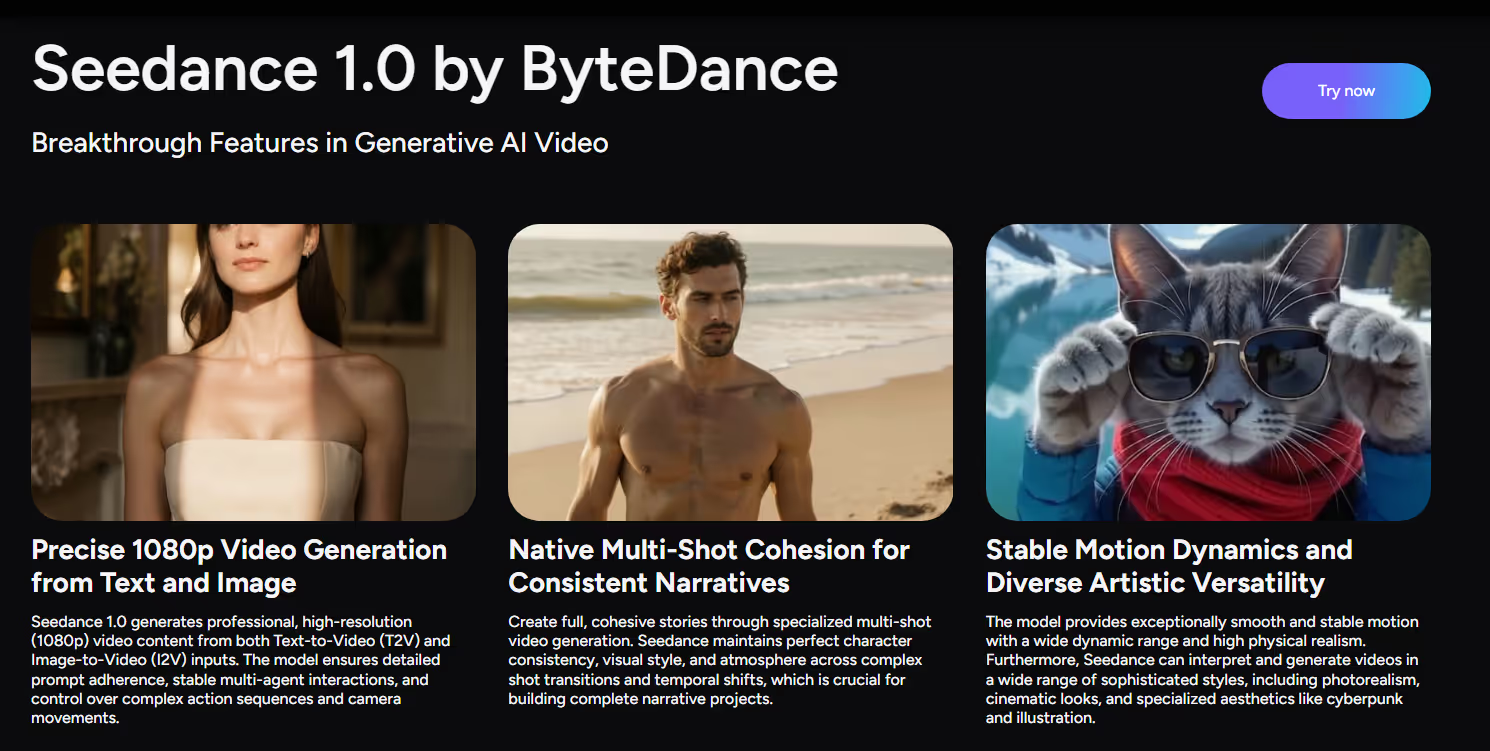

Seedance has quickly become one of the most talked‑about names in AI video generation. The current Seedance 1.0 family already supports multi‑shot video from both text and image inputs, delivering 1080p clips with smooth motion, strong semantic understanding, and impressive prompt following. In short, Seedance today is a fast, high‑quality AI video generator built for cinematic short‑form storytelling.

Seedance 1.5 has not been officially released. Still, based on the Seedance 1.0 technical report and the broader direction of video foundation models, it’s reasonable to expect the next model to go beyond longer and smoother clips. Two areas are likely to matter most to creators:

- Audio‑visual synchronization — generating video and sound together

- Video reference — using existing footage to guide motion, style, or continuity

In this preview, we’ll explore what creators can expect from the next generation of Seedance: predicted key features and practical use cases, using today’s Seedance capabilities as the launch pad.

What Creators Can Expect (Predicted)

Important: The features below are predictions extrapolated from the current Seedance 1.0 documentation and industry trends. None of them has been officially announced.

1. Longer, More Coherent Multi‑Shot Storytelling

Seedance 1.0 already stands out for multi‑shot narrative coherence, with seamless transitions and consistent subjects across shots.

The upcoming model is likely to:

- Support longer multi‑shot sequences than the current 5–10 second defaults

- Improve temporal stability, reducing flicker over extended clips

- Maintain clearer story flow across multiple cuts and scenes

For creators, this means more room for complete mini‑stories, multi‑scene ads, and short narrative arcs in a single AI video generation pass.

2. Smarter Text‑to‑Video and Image‑to‑Video Control

Today’s Seedance supports both text‑to‑video (T2V) and image‑to‑video (I2V), letting creators animate scenes from prompts or still images at up to 1080p.

The next model will likely enhance this with:

- Better handling of complex, multi‑step prompts (“three shots: wide cityscape, then close‑up character, then product hero”)

- More precise camera language controls: close‑ups, tracking shots, handheld, slow zooms

- Tighter integration of image references for style, layout, and character control

This would make the next Seedance release a more expressive text‑to‑video AI and image‑to‑video AI for creators who want their written instructions to map directly to camera moves and composition.
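To make the multi‑shot prompt idea concrete, here is a minimal Python sketch that assembles structured shot descriptions into a single prompt string. The `Shot` class, the `build_multishot_prompt` function, and the prompt wording are hypothetical illustrations, not part of any Seedance API or documented prompt format. The sketch only builds text and calls no model.

```python
from dataclasses import dataclass


@dataclass
class Shot:
    """One shot in a hypothetical multi-shot prompt (illustrative only)."""
    framing: str            # e.g. "wide", "close-up", "hero"
    subject: str            # what the shot shows
    camera: str = "static"  # camera language, e.g. "tracking", "handheld"


def build_multishot_prompt(shots: list[Shot]) -> str:
    """Join shot descriptions into one numbered, plain-text prompt."""
    parts = [
        f"Shot {i}: {s.framing} shot of {s.subject}, {s.camera} camera."
        for i, s in enumerate(shots, start=1)
    ]
    return f"{len(shots)} shots. " + " ".join(parts)


shots = [
    Shot("wide", "a city skyline at dusk", "slow zoom"),
    Shot("close-up", "the main character", "handheld"),
    Shot("hero", "the product on a pedestal", "tracking"),
]
prompt = build_multishot_prompt(shots)
print(prompt)
```

Keeping shots as structured data makes it easy to reorder them or swap a single camera move without rewriting the whole prompt by hand.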

3. Audio‑Visual Synchronization (Predicted)

One major frontier for the next Seedance model is likely audio‑visual synchronization.

Seedance 1.0 already handles the main visual challenges (multi‑shot coherence, stable motion, and fast rendering), but it outputs silent clips. The logical next step, in line with trends in other foundation models, is an AI video model that generates audio in the same pass as the video, with every element synced to the picture:

- Spoken narration or dialogue

- Ambient soundscapes (street noise, nature, interiors)

- Sound effects aligned with on‑screen events

- Simple music or tonal beds

If audio‑visual sync arrives in the next Seedance version, creators could:

- Generate social‑ready video with built‑in sound, rather than editing audio separately

- Produce explainer‑style clips with AI voiceover plus motion

- Prototype story ideas where pacing, visuals, and sound evolve together

This would turn Seedance from a silent AI video generator into a more complete AI video creation system.

4. Video Reference for Motion and Style (Predicted)

Another likely upgrade is video reference support: the ability to feed short reference videos into the model and let them guide the motion, style, or structure of new clips.

Building on Seedance’s existing strengths in multi‑shot storytelling and image guidance, video reference could allow creators to:

- Use a live‑action clip as a template for camera moves and timing

- Transfer motion patterns (e.g., a walk cycle, camera pan, or action beat) into a newly generated scene

- Maintain consistent framing and pacing across multiple AI‑generated variants of a sequence

For AI video generation, video reference would be a powerful way to bridge traditional footage and fully synthetic content, especially for concepting, previz, and stylistic experiments.

5. Even Faster and More Efficient AI Video Generation

Seedance’s technical report highlights a roughly 10× inference speedup, which it attributes to multi‑stage distillation and system‑level optimization.

It’s reasonable to expect the next model to:

- Maintain or improve this high‑speed inference

- Offer more control over quality vs. speed (e.g., “fast draft” vs. “cinematic render”)

- Support higher concurrency for bulk AI video generation workloads

For creators and teams, that translates into more iterations in less time—great for testing hooks, story variations, or visual directions.

How Creators Might Use the Next Seedance 1.5 Model

Once the next Seedance model arrives with these expected upgrades, here’s how those features could translate into real‑world AI video creation workflows.

1. Social & Campaign Videos with Built‑In Sound

With predicted audio‑visual synchronization, creators could use Seedance to:

- Generate TikTok/Reels/Shorts content where visuals and sound arrive together

- Produce promotional clips where product shots, motion, and audio cues are aligned

- Quickly test different narrative and audio combinations without a full edit pass

This would make text‑to‑video AI more “publish‑ready” for sound‑on platforms.

2. Video‑Reference‑Driven Motion and Style

If video reference is supported, the next Seedance model could be ideal for:

- Mimicking the pacing and camera moves of a reference ad or teaser

- Translating a rough storyboard or test shoot into a polished AI‑generated version

- Keeping motion and timing consistent while changing style, setting, or characters

For creators, this would bridge manual shooting and fully synthetic Seedance AI video, enabling hybrid workflows.

3. Multi‑Shot Storyboards and Concept Trailers

With longer, more coherent multi‑shot narratives and stronger T2V/I2V control, Seedance could help:

- Turn script snippets into quick video storyboards

- Build concept trailers for products, games, or films

- Explore different visual styles for the same story beats

This would lower the barrier to exploring complex ideas visually before committing to full production.
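As a rough illustration of exploring several visual styles for the same story beats, the Python sketch below expands a list of beats into one prompt set per style. The beats, style names, and the `style_variants` helper are all made up for this example and do not reflect any Seedance prompt format or API.

```python
def style_variants(beats: list[str], styles: list[str]) -> dict[str, list[str]]:
    """Return, for each style, the same story beats rewritten as styled prompts."""
    return {
        style: [f"{beat}, {style} style" for beat in beats]
        for style in styles
    }


# Hypothetical story beats and visual styles, for illustration only.
beats = ["hero wakes in an empty city", "hero finds the glowing artifact"]
styles = ["hand-painted watercolor", "gritty film noir", "clean 3D render"]

variants = style_variants(beats, styles)
for style, prompts in variants.items():
    print(style)
    for p in prompts:
        print("  ", p)
```

Because the beats stay fixed and only the style changes, the resulting prompt sets can be compared side by side as alternative looks for one storyboard.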

4. Image‑to‑Video Motion for Demos and Explainers

Using enhanced image‑to‑video AI and possible audio sync, the next Seedance model would be a strong fit for:

- Animating product renders into explainer clips with narration

- Bringing UI mockups to life with guided camera moves and click flows

- Turning character art into motion tests with synced sound cues

These workflows would help teams move from static designs to dynamic presentations quickly.

Conclusion

Seedance 1.5 has not been officially released yet, but a plausible roadmap can be inferred from Seedance 1.0’s strengths: cinematic 1080p text‑to‑video and image‑to‑video, multi‑shot narrative coherence, and fast, efficient AI video generation.

Looking ahead, creators can reasonably expect:

- Longer, more coherent multi‑shot stories

- Smarter control over prompts, camera language, and visual styles

- Audio‑visual synchronization for native sound, voice, and effects (predicted)

- Video reference to guide motion and style from existing footage (predicted)

- High‑speed inference that keeps iteration cycles fast

While we wait for an official announcement, the best preparation is to explore what Seedance already does today: multi‑shot, cinematic AI video creation from text and images. That way, you’ll be ready to plug the next‑generation model into your workflow as soon as it arrives.

Keep an eye on the official Seed and Seedance channels for release updates—and get ready to experiment with audio‑synced, reference‑driven Seedance AI video storytelling when the new model goes live.